In the technology world, 2022 has been the year of AI. In the last twelve months, we’ve seen an explosion of AI art, tools, writers, music composers, and AI-based skin analysis. But the undoubted star of the show has been the AI image generator. Across social media, vivid digital illustrations created by a computer from a simple word prompt have slowly replaced photos.

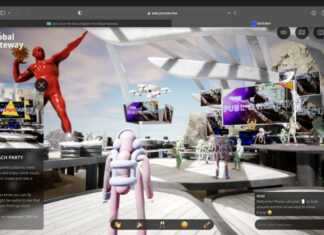

We’re already seeing this technology in the metaverse. Earlier this year, Mona rolled out an AI Material Designer that allows creators on its platform to create textures for objects without using code. Its CEO told The Block: “We are actively working to build and incorporate these types of tools into our creation pipeline for our community. We’re not too far away from users being able to generate assets and entire worlds using AI inside Mona.”

The Metaverse will do less for web 3 than AI. In the next bull run AI will be a bigger buzz word and vastly more beneficial. I’d argue we can’t truly have a Web 3 Metaverse without AI integration.

— Papa Nut (Michael Roberts) (@MichaelRRoberts) December 14, 2022

However, the reception to AI image generation has not been universally positive. This week, the Chinese government effectively banned the creation of AI-generated media without watermarks. Last week, Adobe began selling AI-generated photos as stock images, threatening creatives’ income. Artists have also revolted against computer-generated images reaching the top of ArtStation’s ‘Explore’ section.

According to its critics, the AI image revolution is here, and it’s coming for artists’ income.

Impersonation In The Metaverse

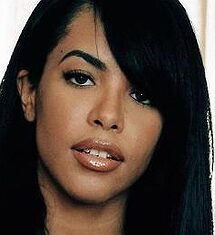

One emerging fear about the use of metaverse AI concerns the manipulation of people’s photos. In a worrying case study, the technology magazine Ars Technica created a fictional man, “John”, from a collection of only seven photos from a volunteer. With only this small dataset, they were able to put John in a series of compromising photos. These included a pornographic-style photo, one of him in a paramilitary-style uniform, and one in an orange prison jumpsuit. Whilst these examples have a slight uncanny valley appearance to them, a larger dataset, or more sophisticated AI, could produce far more incriminating images.

However, with video now taking up the majority of internet traffic, the biggest risk in the metaverse is not with your photos. As platforms like TikTok have exploded in popularity in recent years, the real jeopardy comes with fully realistic metaverse avatars. In a strange, dystopian near-future, this colossal mine of user-generated video could serve as a huge dataset, used to create walking, talking representations that, for all intents and purposes, are indistinguishable from the real you.

Catfishing, in which people are lured into relationships with fictional online personas, could take a sinister turn. Instead of stealing a photo or two of someone, why not become that person in a virtual world? As the globe spends more of its time online, identity theft is increasing. In the US, the Identity Theft Resource Center reports it increased by 36% in 2021 compared to 2020. Metaverse platforms will have to work hard to ensure the problem isn’t magnified in their AI-fuelled virtual worlds.