3D model generators may be the next innovation to rock the world of AI. Point-E, a machine learning system that generates a 3D object from a text prompt, was made available to the public this week by OpenAI. A paper that was published along with the code base claims that Point-E can create 3D models on a single Nvidia V100 GPU in one to two minutes.

Point-E does not produce 3D objects in the conventional sense. Instead, it generates point clouds, discrete sets of data points in space that represent a 3D shape; hence the playful abbreviation. (The “E” in Point-E stands for “efficiency,” because it purports to be faster than earlier 3D object generation techniques.)
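To make the idea of a point cloud concrete, here is a minimal sketch in plain Python. It is not Point-E code: the `Point` class and the `sample_sphere_cloud` and `bounding_box` helpers are hypothetical names invented for illustration. It simply shows that a colored point cloud is a list of positions with colors attached, with no faces or edges connecting them.

```python
from dataclasses import dataclass
import math
import random


@dataclass(frozen=True)
class Point:
    """One sample of a colored point cloud: a position plus an RGB color."""
    x: float
    y: float
    z: float
    r: float
    g: float
    b: float


def sample_sphere_cloud(n, radius=1.0, seed=0):
    """Return n red points scattered on the surface of a sphere.

    Illustrative stand-in for a generated shape; not how Point-E samples.
    """
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        # Normalizing a 3D Gaussian sample gives a uniformly random direction.
        dx, dy, dz = (rng.gauss(0.0, 1.0) for _ in range(3))
        norm = math.sqrt(dx * dx + dy * dy + dz * dz)
        x, y, z = (radius * c / norm for c in (dx, dy, dz))
        points.append(Point(x, y, z, 1.0, 0.0, 0.0))
    return points


def bounding_box(points):
    """Return the (min corner, max corner) of the axis-aligned bounding box."""
    xs = [p.x for p in points]
    ys = [p.y for p in points]
    zs = [p.z for p in points]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


cloud = sample_sphere_cloud(1024)
lo, hi = bounding_box(cloud)
print(len(cloud), "points; bounding box from", lo, "to", hi)
```

Because the structure carries no surface information, anything that needs a solid surface, such as rendering or printing, generally requires a further conversion step.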

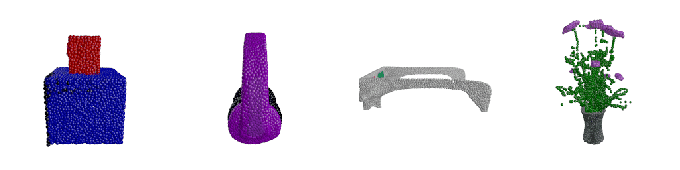

Here is a Sample of the OpenAI 3D Model Generator

Point-E’s text-to-image model generates a synthetic rendered object from a text prompt, such as “a 3D printable gear, a single gear, 3 inches in diameter and half-inch thick,” and feeds it to the image-to-3D model, which then generates a point cloud.
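The two-stage flow described above can be sketched as a simple function composition. This is only an outline of the data flow under stated assumptions: `generate_point_cloud`, `fake_text_to_image`, and `fake_image_to_points` are hypothetical placeholders, not functions from the Point-E code base, and the fake stages return dummy data rather than running any model.

```python
from typing import Callable, List, Tuple

# Hypothetical type aliases for this sketch only.
Image = List[List[Tuple[int, int, int]]]        # rows of RGB pixels
PointCloud = List[Tuple[float, float, float]]   # XYZ positions


def generate_point_cloud(
    prompt: str,
    text_to_image: Callable[[str], Image],
    image_to_points: Callable[[Image], PointCloud],
) -> PointCloud:
    """Stage 1 turns text into an image; stage 2 turns that image into points."""
    image = text_to_image(prompt)
    # In this sketch, stage 2 sees only the image, never the original prompt.
    return image_to_points(image)


def fake_text_to_image(prompt: str) -> Image:
    """Placeholder: returns a 4x4 gray image regardless of the prompt."""
    return [[(128, 128, 128)] * 4 for _ in range(4)]


def fake_image_to_points(image: Image) -> PointCloud:
    """Placeholder: emits one point per pixel on a flat plane."""
    return [
        (float(col), float(row), 0.0)
        for row in range(len(image))
        for col in range(len(image[row]))
    ]


cloud = generate_point_cloud(
    "a single gear", fake_text_to_image, fake_image_to_points
)
print(len(cloud), "points")
```

Passing the stages in as arguments keeps the hand-off explicit: whatever the first stage produces is all the second stage has to work with.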

After training the models on a dataset of “several million” 3D objects and associated metadata, Point-E could generate colored point clouds that frequently matched text prompts, according to the OpenAI researchers. It’s not flawless: occasionally Point-E’s image-to-3D model fails to interpret the image from the text-to-image model, leading to a shape that does not correspond to the text prompt. Even so, the OpenAI team claims that it is orders of magnitude faster than the previous state of the art.

“While our method performs worse on this evaluation than state-of-the-art techniques, it produces samples in a small fraction of the time,” they wrote in the paper. “This could make it more practical for certain applications, or could allow for the discovery of higher-quality 3D objects.”

What are the Applications

Once it’s a little more refined, the system might find use in game and animation development workflows, thanks to an additional model that converts its point clouds to meshes.

OpenAI is not the first to build a text-to-3D generator. Google’s DreamFusion, in contrast to the earlier Dream Fields, doesn’t need any prior training, so it can create 3D models of objects without 3D data.

While all eyes are on 2D art generators at present, model-synthesizing AI could be the next big industry disruptor. 3D models are already widely used: architectural firms use them to demo proposed buildings and landscapes, for example, while engineers use them as designs for new devices, vehicles, and structures.

However, creating 3D models typically takes a while — anywhere from a few hours to a few days.

The question is what kinds of intellectual property disputes might eventually arise. There is a sizable market for 3D models, and artists can sell their original content on a number of online marketplaces, such as CGStudio and CreativeMarket. Point-E, like DALL-E 2, does not mention or credit any of the artists whose work might have influenced its generations.

But OpenAI is saving that topic for another time. Neither the Point-E paper nor the GitHub page makes any mention of copyright.
